Read the Docs: Documentation Simplified¶

Read the Docs simplifies software documentation by building, versioning, and hosting of your docs, automatically. This enables many “docs like code” workflows, keeping your code & documentation as close as possible.

- Never out of sync 🔄

Whenever you push code to your favorite version control system, whether that is Git or Mercurial, Read the Docs will automatically build your docs so your code and documentation are always up-to-date. Read more about VCS Integrations.

- Multiple versions 🗂️

Read the Docs can host and build multiple versions of your docs so having a 1.0 version of your docs and a 2.0 version of your docs is as easy as having a separate branch or tag in your version control system. Read more about Versioned Documentation.

- Open Source and User Focused 💓

Our code is free and open source. Our company is bootstrapped and 100% user focused. Read the Docs Community hosts documentation for over 100,000 large and small open source projects, in almost every human and computer language. Read the Docs for Business supports hundreds of organizations with product and internal documentation.

You can find out more about our all the Main Features in these pages.

First steps¶

Are you new to software documentation or are you looking to use your existing docs with Read the Docs? Learn about documentation authoring tools such as Sphinx and MkDocs to help you create fantastic documentation for your project.

Tutorial: Read the Docs tutorial

Getting started: With Sphinx | With MkDocs | Choosing Between Our Two Platforms

Importing your existing documentation: Import guide

Read the Docs tutorial¶

In this tutorial you will create a documentation project on Read the Docs by importing an Sphinx project from a GitHub repository, tailor its configuration, and explore several useful features of the platform.

The tutorial is aimed at people interested in learning how to use Read the Docs to host their documentation projects. You will fork a fictional software library similar to the one developed in the official Sphinx tutorial. No prior experience with Sphinx is required, and you can follow this tutorial without having done the Sphinx one.

The only things you will need to follow are a web browser, an Internet connection, and a GitHub account (you can register for a free account if you don’t have one). You will use Read the Docs Community, which means that the project will be public.

Getting started¶

Preparing your project on GitHub¶

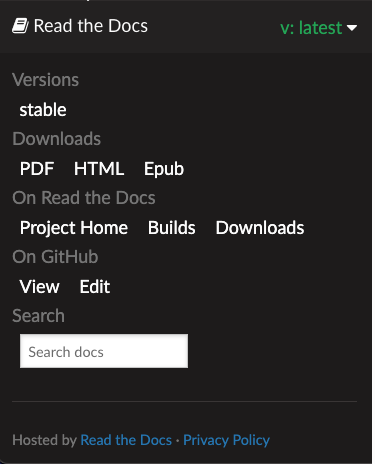

To start, sign in to GitHub and navigate to the tutorial GitHub template, where you will see a green Use this template button. Click it to open a new page that will ask you for some details:

Leave the default “Owner”, or change it to something better for a tutorial project.

Introduce an appropriate “Repository name”, for example

rtd-tutorial.Make sure the project is “Public”, rather than “Private”.

After that, click on the green Create repository from template button, which will generate a new repository on your personal account (or the one of your choosing). This is the repository you will import on Read the Docs, and it contains the following files:

README.rstBasic description of the repository, you will leave it untouched.

pyproject.tomlPython project metadata that makes it installable. Useful for automatic documentation generation from sources.

lumache.pySource code of the fictional Python library.

docs/Directory holding all the Sphinx documentation sources, including some required dependencies in

docs/requirements.txt, the Sphinx configurationdocs/source/conf.py, and the root documentdocs/source/index.rstwritten in reStructuredText.

GitHub template for the tutorial¶

Sign up for Read the Docs¶

To sign up for a Read the Docs account, navigate to the Sign Up page and choose the option Sign up with GitHub. On the authorization page, click the green Authorize readthedocs button.

GitHub authorization page¶

Note

Read the Docs needs elevated permissions to perform certain operations that ensure that the workflow is as smooth as possible, like installing webhooks. If you want to learn more, check out Permissions for connected accounts.

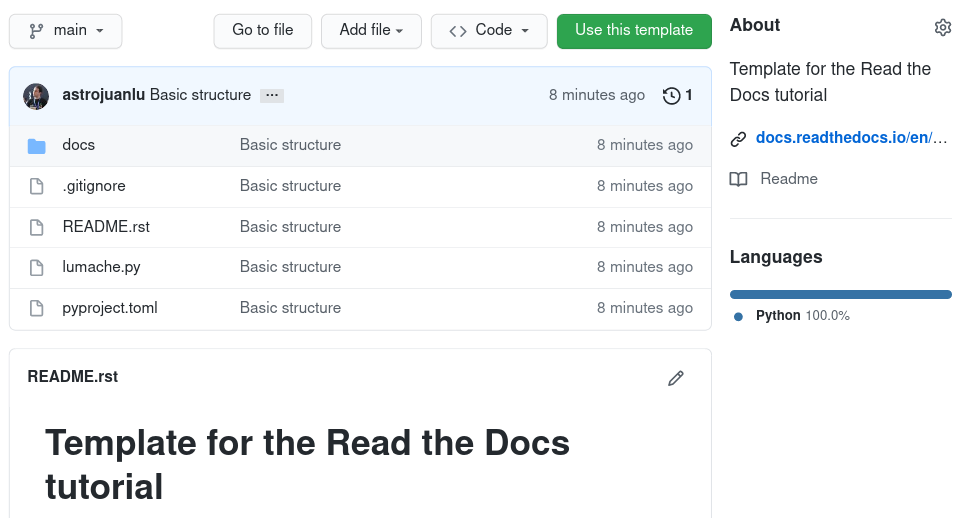

After that, you will be redirected to Read the Docs, where you will need to confirm your e-mail and username. Clicking the Sign Up » button will create your account and redirect you to your dashboard.

By now, you should have two email notifications:

One from GitHub, telling you that “A third-party OAuth application … was recently authorized to access your account”. You don’t need to do anything about it.

Another one from Read the Docs, prompting you to “verify your email address”. Click on the link to finalize the process.

Finally, you created your account on Read the Docs and are ready to import your first project.

Welcome!

Read the Docs empty dashboard¶

Note

Our commercial site offers some extra features, like support for private projects. You can learn more about our two different sites.

First steps¶

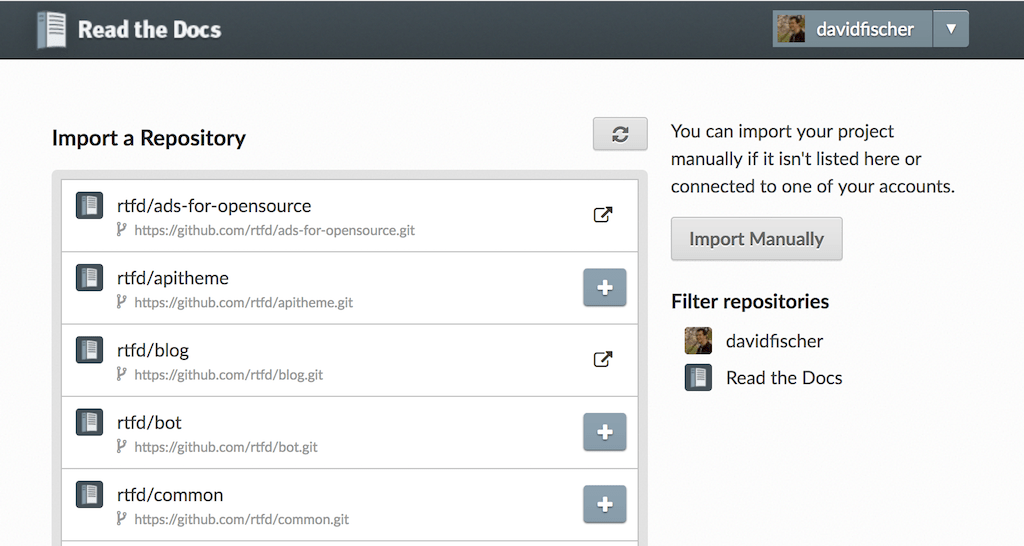

Importing the project to Read the Docs¶

To import your GitHub project to Read the Docs, first click on the Import a Project button on your dashboard (or browse to the import page directly). You should see your GitHub account under the “Filter repositories” list on the right. If the list of repositories is empty, click the 🔄 button, and after that all your repositories will appear on the center.

Import projects workflow¶

Locate your rtd-tutorial project

(possibly clicking next ›› at the bottom if you have several pages of projects),

and then click on the ➕ button to the right of the name.

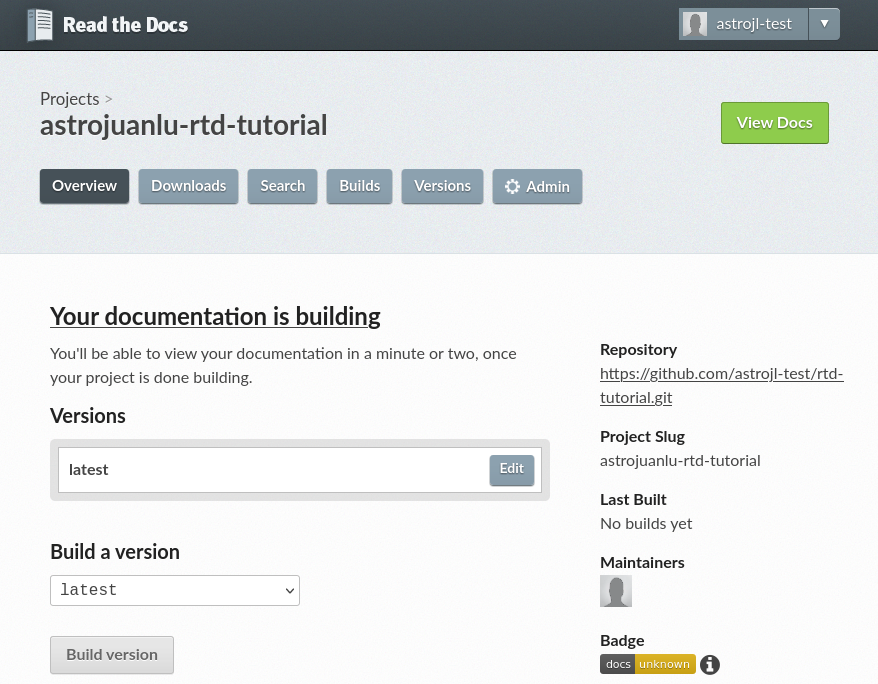

The next page will ask you to fill some details about your Read the Docs project:

- Name

The name of the project. It has to be unique across all the service, so it is better if you prepend your username, for example

{username}-rtd-tutorial.- Repository URL

The URL that contains the sources. Leave the automatically filled value.

- Repository type

Version control system used, leave it as “Git”.

- Default branch

Name of the default branch of the project, leave it as

main.- Edit advanced project options

Leave it unchecked, we will make some changes later.

After hitting the Next button, you will be redirected to the project home. You just created your first project on Read the Docs! 🎉

Project home¶

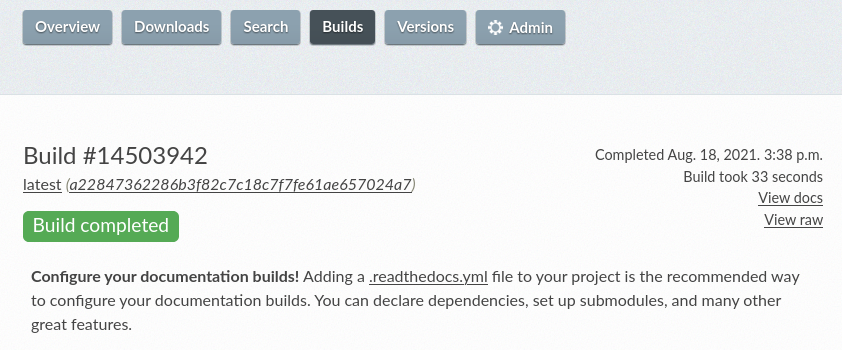

Checking the first build¶

Read the Docs will try to build the documentation of your project right after you create it. To see the build logs, click on the Your documentation is building link on the project home, or alternatively navigate to the “Builds” page, then open the one on top (the most recent one).

If the build has not finished yet by the time you open it, you will see a spinner next to a “Installing” or “Building” indicator, meaning that it is still in progress.

First successful documentation build¶

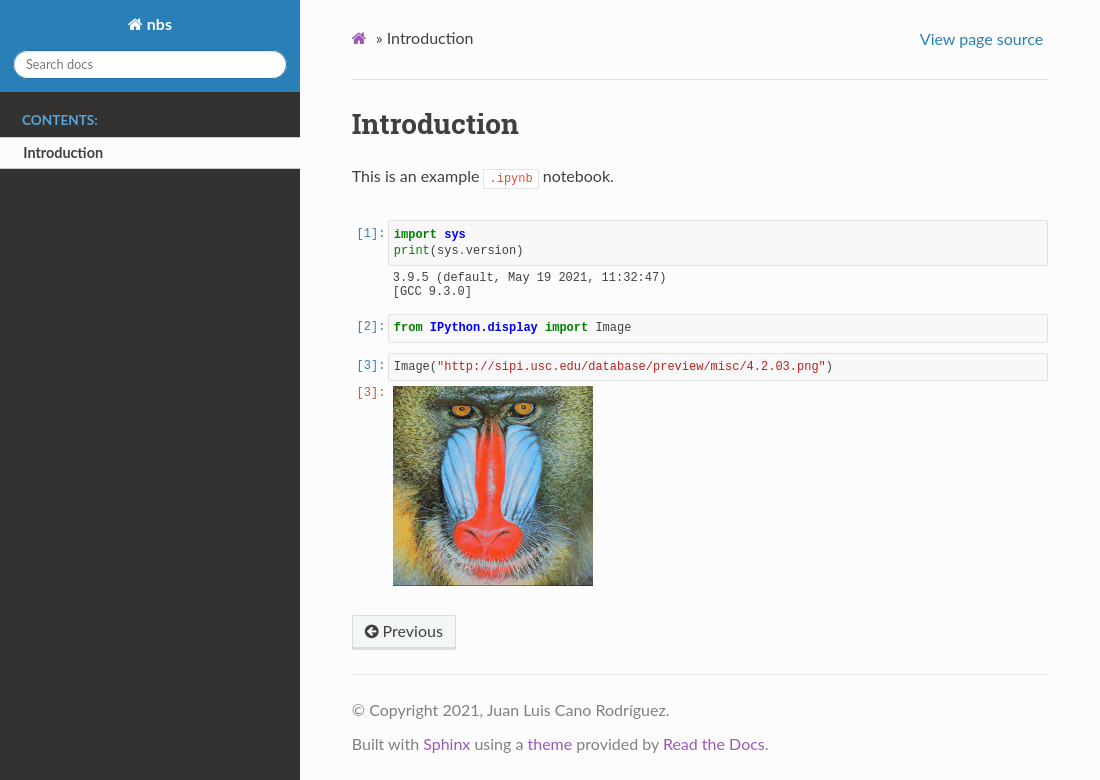

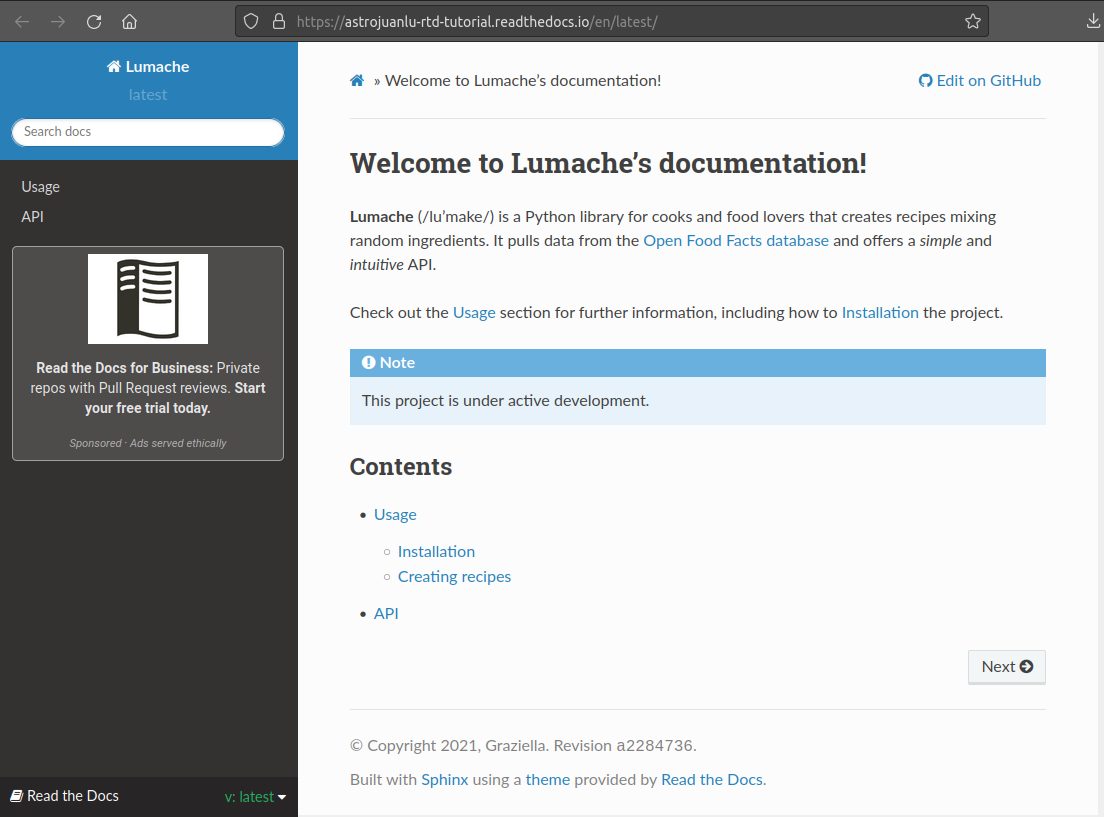

When the build finishes, you will see a green “Build completed” indicator, the completion date, the elapsed time, and a link to see the corresponding documentation. If you now click on View docs, you will see your documentation live!

HTML documentation live on Read the Docs¶

Note

Advertisement is one of our main sources of revenue. If you want to learn more about how do we fund our operations and explore options to go ad-free, check out our Sustainability page.

If you don’t see the ad, you might be using an ad blocker. Our EthicalAds network respects your privacy, doesn’t target you, and tries to be as unobstrusive as possible, so we would like to kindly ask you to not block us ❤️

Basic configuration changes¶

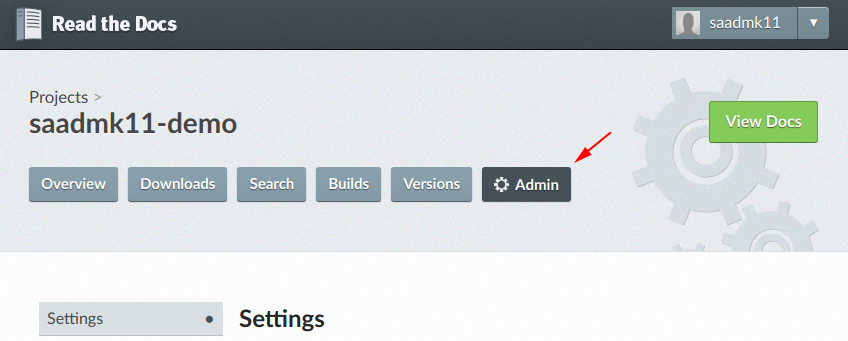

You can now proceed to make some basic configuration adjustments. Navigate back to the project page and click on the ⚙ Admin button, which will open the Settings page.

First of all, add the following text in the description:

Lumache (/lu’make/) is a Python library for cooks and food lovers that creates recipes mixing random ingredients.

Then set the project homepage to https://world.openfoodfacts.org/,

and write food, python in the list of tags.

All this information will be shown on your project home.

After that, configure your email so you get a notification if the build fails. To do so, click on the Notifications link on the left, type the email where you would like to get the notification, and click the Add button. After that, your email will be shown under “Existing Notifications”.

Trigger a build from a pull request¶

Read the Docs allows you to trigger builds from GitHub pull requests and gives you a preview of how the documentation would look like with those changes.

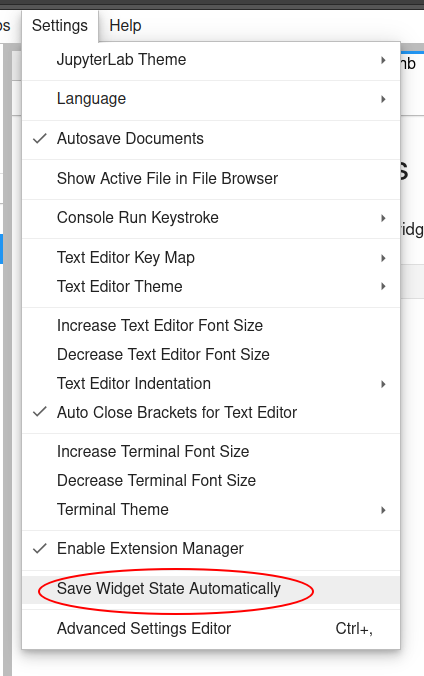

To enable that functionality, first click on the Advanced Settings link on the left under the ⚙ Admin menu, check the “Build pull requests for this project” checkbox, and click the Save button at the bottom of the page.

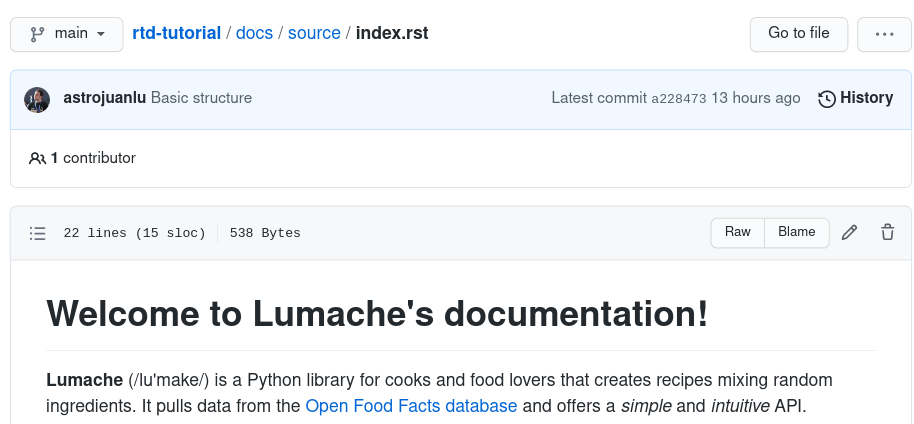

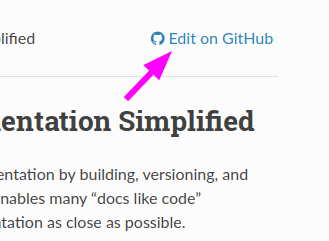

Next, navigate to your GitHub repository, locate the file docs/source/index.rst,

and click on the ✏️ icon on the top-right with the tooltip “Edit this file”

to open a web editor (more information on their documentation).

File view on GitHub before launching the editor¶

In the editor, add the following sentence to the file:

Lumache has its documentation hosted on Read the Docs.

Write an appropriate commit message, and choose the “Create a new branch for this commit and start a pull request” option, typing a name for the new branch. When you are done, click the green Propose changes button, which will take you to the new pull request page, and there click the Create pull request button below the description.

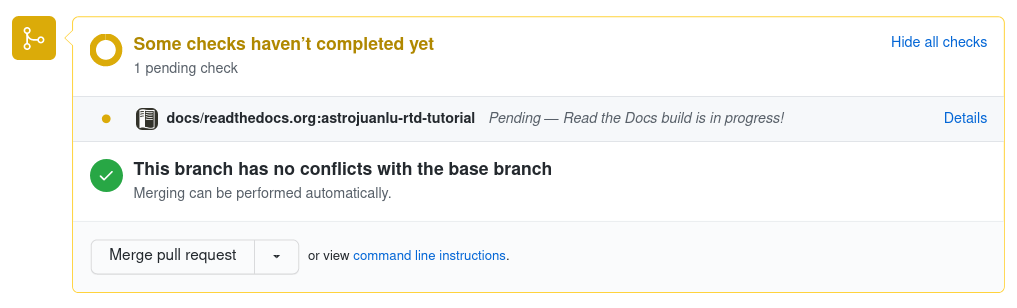

Read the Docs building the pull request from GitHub¶

After opening the pull request, a Read the Docs check will appear indicating that it is building the documentation for that pull request. If you click on the Details link while it is building, you will access the build logs, otherwise it will take you directly to the documentation. When you are satisfied, you can merge the pull request!

Customizing the build process¶

The Settings page of the project home allows you

to change some global configuration values of your project.

In addition, you can further customize the building process

using the .readthedocs.yaml configuration file.

This has several advantages:

The configuration lives next to your code and documentation, tracked by version control.

It can be different for every version (more on versioning in the next section).

Some configurations are only available using the config file.

Read the Docs works without this configuration by making some decisions on your behalf. For example, what Python version to use, how to install the requirements, and others.

Tip

Settings that apply to the entire project are controlled in the web dashboard, while settings that are version or build specific are better in the YAML file.

Upgrading the Python version¶

For example, to explicitly use Python 3.8 to build your project,

navigate to your GitHub repository, click on the Add file button,

and add a .readthedocs.yaml file with these contents to the root of your project:

version: 2

build:

os: "ubuntu-20.04"

tools:

python: "3.8"

The purpose of each key is:

versionMandatory, specifies version 2 of the configuration file.

build.osRequired to specify the Python version, states the name of the base image.

build.tools.pythonDeclares the Python version to be used.

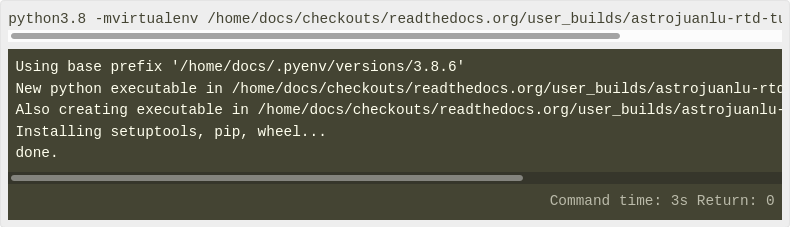

After you commit these changes, go back to your project home,

navigate to the “Builds” page, and open the new build that just started.

You will notice that one of the lines contains python3.8:

if you click on it, you will see the full output of the corresponding command,

stating that it used Python 3.8.6 to create the virtual environment.

Read the Docs build using Python 3.8¶

Making warnings more visible¶

If you navigate to your HTML documentation, you will notice that the index page looks correct, but actually the API section is empty. This is a very common issue with Sphinx, and the reason is stated in the build logs. On the build page you opened before, click on the View raw link on the top right, which opens the build logs in plain text, and you will see several warnings:

WARNING: [autosummary] failed to import 'lumache': no module named lumache

...

WARNING: autodoc: failed to import function 'get_random_ingredients' from module 'lumache'; the following exception was raised:

No module named 'lumache'

WARNING: autodoc: failed to import exception 'InvalidKindError' from module 'lumache'; the following exception was raised:

No module named 'lumache'

To spot these warnings more easily and allow you to address them,

you can add the sphinx.fail_on_warning option to your Read the Docs configuration file.

For that, navigate to GitHub, locate the .readthedocs.yaml file you created earlier,

click on the ✏️ icon, and add these contents:

version: 2

build:

os: "ubuntu-20.04"

tools:

python: "3.8"

sphinx:

fail_on_warning: true

At this point, if you navigate back to your “Builds” page,

you will see a Failed build, which is exactly the intended result:

the Sphinx project is not properly configured yet,

and instead of rendering an empty API page, now the build fails.

The reason sphinx.ext.autosummary and sphinx.ext.autodoc

fail to import the code is because it is not installed.

Luckily, the .readthedocs.yaml also allows you to specify

which requirements to install.

To install the library code of your project,

go back to editing .readthedocs.yaml on GitHub and modify it as follows:

python:

# Install our python package before building the docs

install:

- method: pip

path: .

With this change, Read the Docs will install the Python code

before starting the Sphinx build, which will finish seamlessly.

If you go now to the API page of your HTML documentation,

you will see the lumache summary!

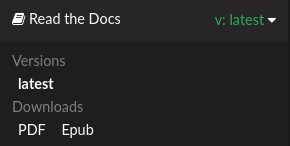

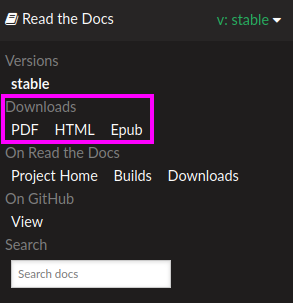

Enabling PDF and EPUB builds¶

Sphinx can build several other formats in addition to HTML, such as PDF and EPUB. You might want to enable these formats for your project so your users can read the documentation offline.

To do so, add this extra content to your .readthedocs.yaml:

sphinx:

fail_on_warning: true

formats:

- pdf

- epub

After this change, PDF and EPUB downloads will be available both from the “Downloads” section of the project home, as well as the flyout menu.

Downloads available from the flyout menu¶

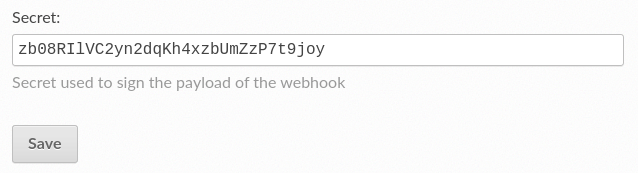

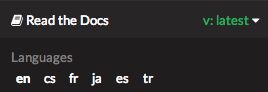

Versioning documentation¶

Read the Docs allows you to have several versions of your documentation,

in the same way that you have several versions of your code.

By default, it creates a latest version

that points to the default branch of your version control system

(main in the case of this tutorial),

and that’s why the URLs of your HTML documentation contain the string /latest/.

Creating a new version¶

Let’s say you want to create a 1.0 version of your code,

with a corresponding 1.0 version of the documentation.

For that, first navigate to your GitHub repository, click on the branch selector,

type 1.0.x, and click on “Create branch: 1.0.x from ‘main’”

(more information on their documentation).

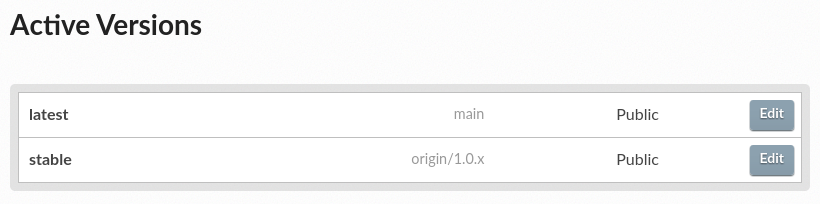

Next, go to your project home, click on the Versions button, and under “Active Versions” you will see two entries:

The

latestversion, pointing to themainbranch.A new

stableversion, pointing to theorigin/1.0.xbranch.

List of active versions of the project¶

Right after you created your branch,

Read the Docs created a new special version called stable pointing to it,

and started building it. When the build finishes,

the stable version will be listed in the flyout menu

and your readers will be able to choose it.

Note

Read the Docs follows some rules

to decide whether to create a stable version pointing to your new branch or tag.

To simplify, it will check if the name resembles a version number

like 1.0, 2.0.3 or 4.x.

Now you might want to set stable as the default version,

rather than latest,

so that users see the stable documentation

when they visit the root URL of your documentation

(while still being able to change the version in the flyout menu).

For that, go to the Advanced Settings link

under the ⚙ Admin menu of your project home,

choose stable in the “Default version*” dropdown,

and hit Save at the bottom.

Done!

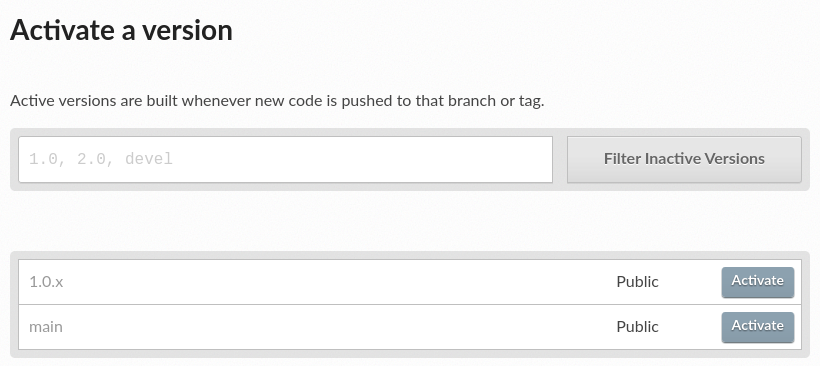

Modifying versions¶

Both latest and stable are now active, which means that

they are visible for users, and new builds can be triggered for them.

In addition to these, Read the Docs also created an inactive 1.0.x

version, which will always point to the 1.0.x branch of your repository.

List of inactive versions of the project¶

Let’s activate the 1.0.x version.

For that, go to the “Versions” on your project home,

locate 1.0.x under “Activate a version”,

and click on the Activate button.

This will take you to a new page with two checkboxes,

“Active” and “Hidden”. Check only “Active”,

and click Save.

After you do this, 1.0.x will appear on the “Active Versions” section,

and a new build will be triggered for it.

Note

You can read more about hidden versions in our documentation.

Show a warning for old versions¶

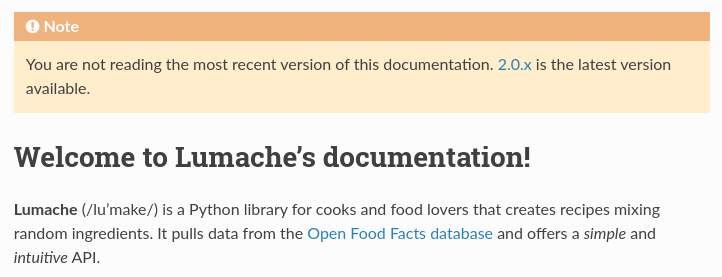

When your project matures, the number of versions might increase. Sometimes you will want to warn your readers when they are browsing an old or outdated version of your documentation.

To showcase how to do that, let’s create a 2.0 version of the code:

navigate to your GitHub repository, click on the branch selector,

type 2.0.x, and click on “Create branch: 2.0.x from ‘main’”.

This will trigger two things:

Since

2.0.xis your newest branch,stablewill switch to tracking it.A new

2.0.xversion will be created on your Read the Docs project.Since you already have an active

stableversion,2.0.xwill be activated.

From this point, 1.0.x version is no longer the most up to date one.

To display a warning to your readers, go to the ⚙ Admin menu of your project home,

click on the Advanced Settings link on the left,

enable the “Show version warning” checkbox, and click the Save button.

If you now browse the 1.0.x documentation, you will see a warning on top

encouraging you to browse the latest version instead. Neat!

Warning for old versions¶

Getting insights from your projects¶

Once your project is up and running, you will probably want to understand how readers are using your documentation, addressing some common questions like:

what pages are the most visited pages?

what search terms are the most frequently used?

are readers finding what they look for?

Read the Docs offers you some analytics tools to find out the answers.

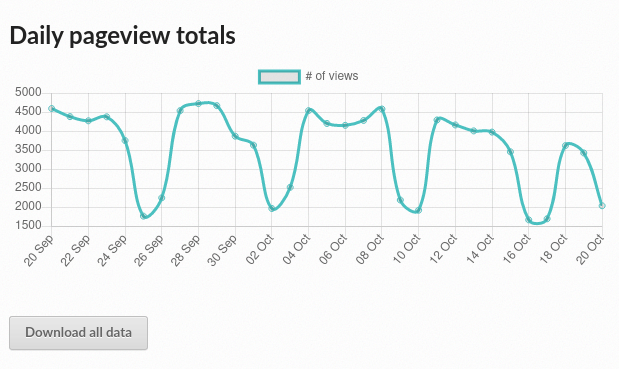

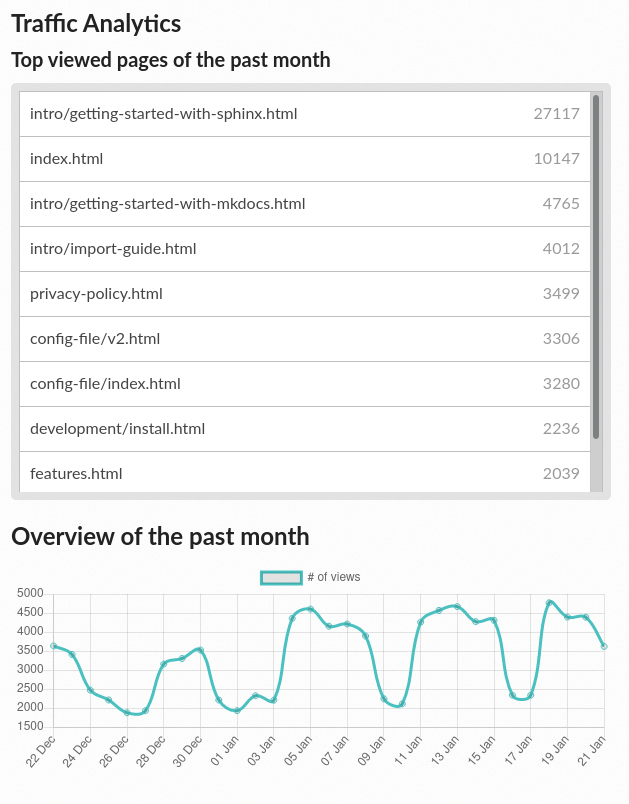

Browsing Traffic Analytics¶

The Traffic Analytics view shows the top viewed documentation pages of the past 30 days, plus a visualization of the daily views during that period. To generate some artificial views on your newly created project, you can first click around the different pages of your project, which will be accounted immediately for the current day statistics.

To see the Traffic Analytics view, go back the project page again, click on the ⚙ Admin button, and then click on the Traffic Analytics section. You will see the list of pages in descending order of visits, as well as a plot similar to the one below.

Traffic Analytics plot¶

Note

The Traffic Analytics view explained above gives you a simple overview of how your readers browse your documentation. It has the advantage that it stores no identifying information about your visitors, and therefore it respects their privacy. However, you might want to get more detailed data by enabling Google Analytics. Notice though that we take some extra measures to respect user privacy when they visit projects that have Google Analytics enabled, and this might reduce the number of visits counted.

Finally, you can also download this data for closer inspection. To do that, scroll to the bottom of the page and click on the Download all data button. That will prompt you to download a CSV file that you can process any way you want.

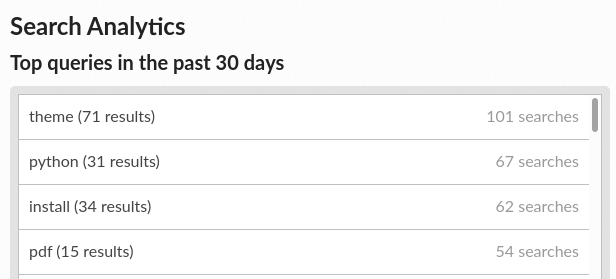

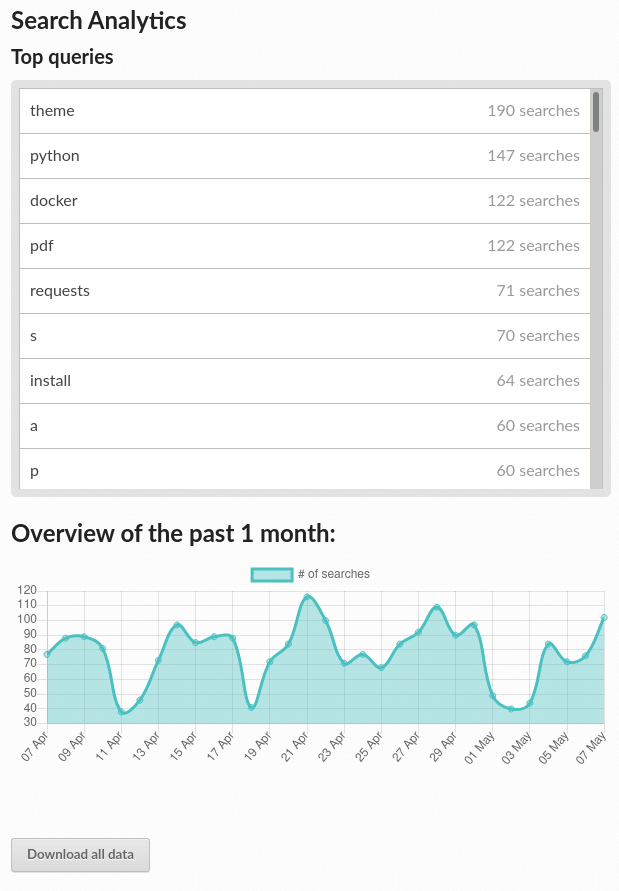

Browsing Search Analytics¶

Apart from traffic analytics, Read the Docs also offers the possibility to inspect what search terms your readers use on your documentation. This can inform decisions on what areas to reinforce, or what parts of your project are less understood or more difficult to find.

To generate some artificial search statistics on the project,

go to the HTML documentation, locate the Sphinx search box on the left,

type ingredients, and press the Enter key.

You will be redirected to the search results page, which will show two entries.

Next, go back to the ⚙ Admin section of your project page,

and then click on the Search Analytics section.

You will see a table with the most searched queries

(including the ingredients one you just typed),

how many results did each query return, and how many times it was searched.

Below the queries table, you will also see a visualization

of the daily number of search queries during the past 30 days.

Most searched terms¶

Like the Traffic Analytics, you can also download the whole dataset in CSV format by clicking on the Download all data button.

Where to go from here¶

This is the end of the tutorial. You started by forking a GitHub repository and importing it on Read the Docs, building its HTML documentation, and then went through a series of steps to customize the build process, tweak the project configuration, and add new versions.

Here you have some resources to continue learning about documentation and Read the Docs:

You can learn more about the functionality of the platform by going over our Main Features page.

To make the most of the documentation generators that are supported, you can read the Sphinx tutorial or the MkDocs User Guide.

Display example projects and read the source code in Example projects.

Whether you are a documentation author, a project administrator, a developer, or a designer, you can follow our how-to guides that cover specific tasks, available under Guides.

You can check out some of the Advanced features of Read the Docs, like Subprojects or Automation Rules, to name a few.

For private project support and other enterprise features, you can use our commercial service (and if in doubt, check out Choosing Between Our Two Platforms).

Do you want to join a global community of fellow

documentarians? Check out Write the Docs and its Slack workspace.Do you want to contribute to Read the Docs? We greatly appreciate it! Check out Contributing to Read the Docs.

Happy documenting!

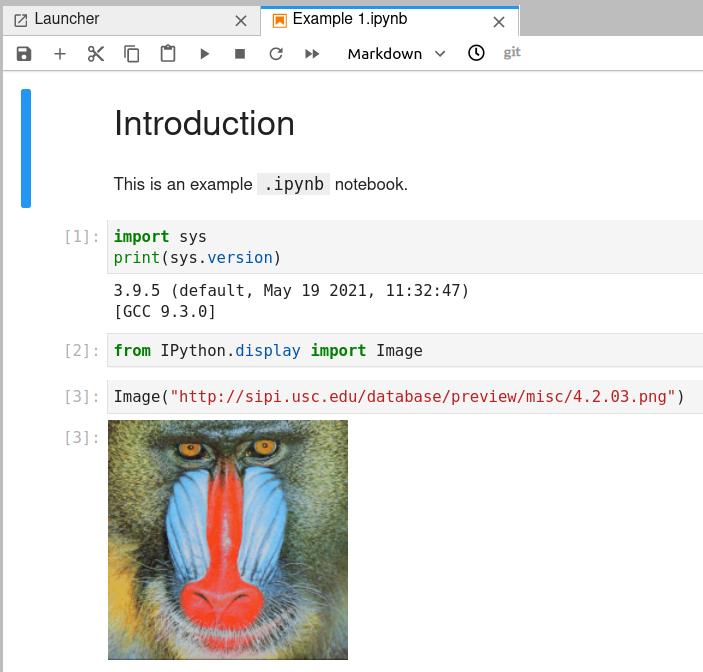

Getting Started with Sphinx¶

Sphinx is a powerful documentation generator that has many great features for writing technical documentation including:

Generate web pages, printable PDFs, documents for e-readers (ePub), and more all from the same sources

You can use reStructuredText or Markdown to write documentation

An extensive system of cross-referencing code and documentation

Syntax highlighted code samples

A vibrant ecosystem of first and third-party extensions

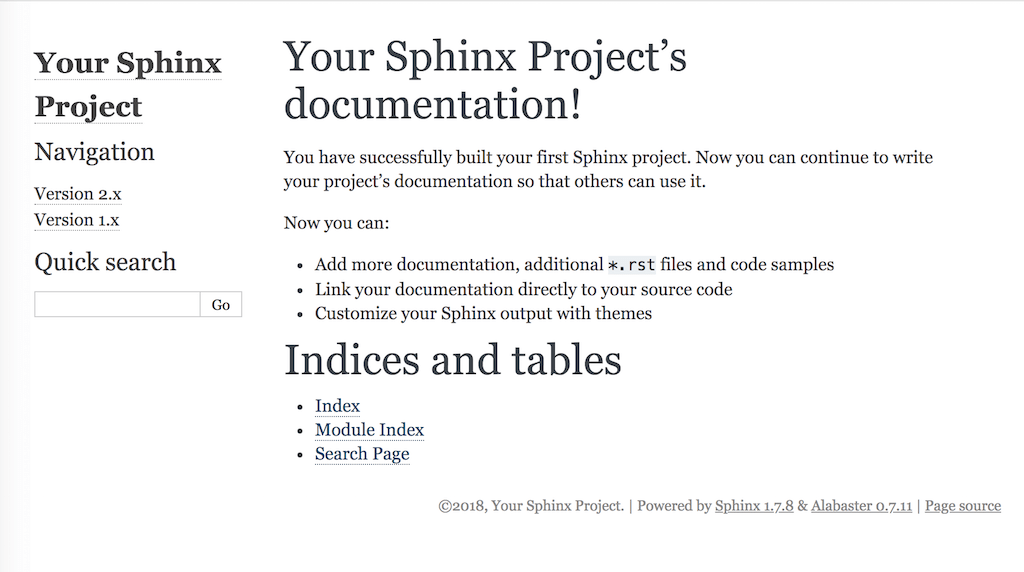

If you want to learn more about how to create your first Sphinx project, read on. If you are interested in exploring the Read the Docs platform using an already existing Sphinx project, check out Read the Docs tutorial.

Quick start¶

See also

If you already have a Sphinx project, check out our Importing Your Documentation guide.

Assuming you have Python already, install Sphinx:

pip install sphinx

Create a directory inside your project to hold your docs:

cd /path/to/project

mkdir docs

Run sphinx-quickstart in there:

cd docs

sphinx-quickstart

This quick start will walk you through creating the basic configuration; in most cases, you

can just accept the defaults. When it’s done, you’ll have an index.rst, a

conf.py and some other files. Add these to revision control.

Now, edit your index.rst and add some information about your project.

Include as much detail as you like (refer to the reStructuredText syntax

or this template if you need help). Build them to see how they look:

make html

Your index.rst has been built into index.html

in your documentation output directory (typically _build/html/index.html).

Open this file in your web browser to see your docs.

Your Sphinx project is built¶

Edit your files and rebuild until you like what you see, then commit your changes and push to your public repository. Once you have Sphinx documentation in a public repository, you can start using Read the Docs by importing your docs.

Warning

We strongly recommend to pin the Sphinx version used for your project to build the docs to avoid potential future incompatibilities.

Using Markdown with Sphinx¶

You can use Markdown using MyST and reStructuredText in the same Sphinx project. We support this natively on Read the Docs, and you can do it locally:

pip install myst-parser

Then in your conf.py:

extensions = ['myst_parser']

You can now continue writing your docs in .md files and it will work with Sphinx.

Read the Getting started with MyST in Sphinx docs for additional instructions.

Get inspired!¶

You might learn more and find the first ingredients for starting your own documentation project by looking at Example projects - view live example renditions and copy & paste from the accompanying source code.

External resources¶

Here are some external resources to help you learn more about Sphinx.

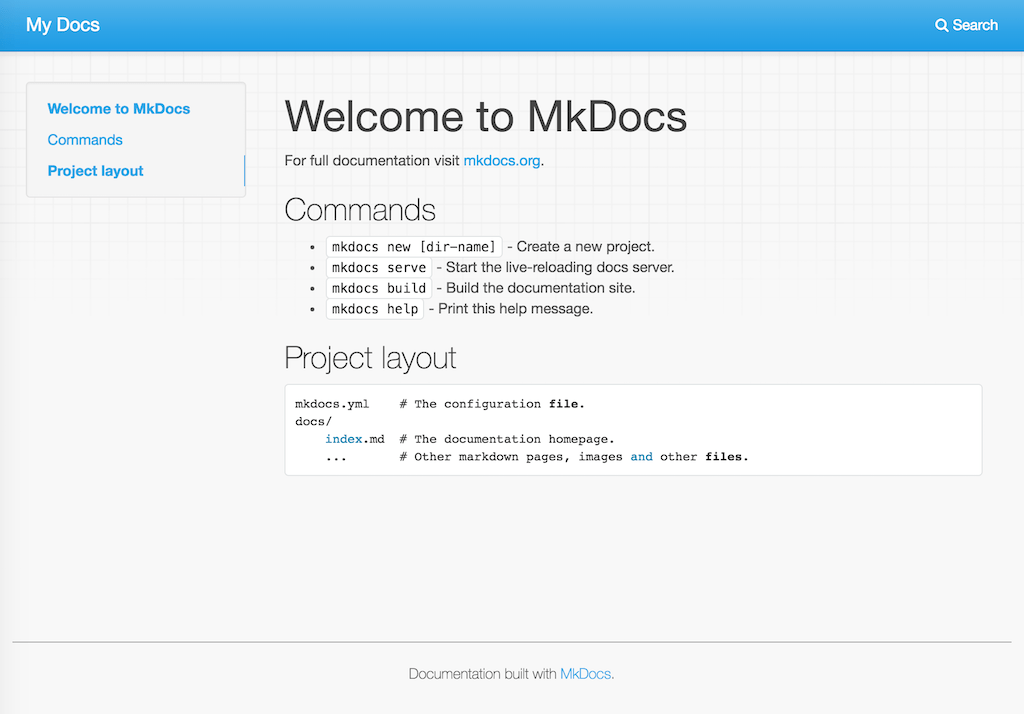

Getting Started with MkDocs¶

MkDocs is a documentation generator that focuses on speed and simplicity. It has many great features including:

Preview your documentation as you write it

Easy customization with themes and extensions

Writing documentation with Markdown

Note

MkDocs is a great choice for building technical documentation. However, Read the Docs also supports Sphinx, another tool for writing and building documentation.

Quick start¶

See also

If you already have a Mkdocs project, check out our Importing Your Documentation guide.

Assuming you have Python already, install MkDocs:

pip install mkdocs

Setup your MkDocs project:

mkdocs new .

This command creates mkdocs.yml which holds your MkDocs configuration,

and docs/index.md which is the Markdown file

that is the entry point for your documentation.

You can edit this index.md file to add more details about your project

and then you can build your documentation:

mkdocs serve

This command builds your Markdown files into HTML and starts a development server to browse your documentation. Open up http://127.0.0.1:8000/ in your web browser to see your documentation. You can make changes to your Markdown files and your docs will automatically rebuild.

Your MkDocs project is built¶

Once you have your documentation in a public repository such as GitHub, Bitbucket, or GitLab, you can start using Read the Docs by importing your docs.

Warning

We strongly recommend to pin the MkDocs version used for your project to build the docs to avoid potential future incompatibilities.

Get inspired!¶

You might learn more and find the first ingredients for starting your own documentation project by looking at Example projects - view live example renditions and copy & paste from the accompanying source code.

External resources¶

Here are some external resources to help you learn more about MkDocs.

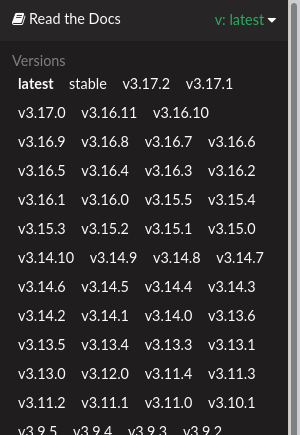

Importing Your Documentation¶

To import a public documentation repository, visit your Read the Docs dashboard and click Import. For private repositories, please use Read the Docs for Business.

Automatically import your docs¶

If you have connected your Read the Docs account to GitHub, Bitbucket, or GitLab, you will see a list of your repositories that we are able to import. To import one of these projects, just click the import icon next to the repository you’d like to import. This will bring up a form that is already filled with your project’s information. Feel free to edit any of these properties, and then click Next to build your documentation.

Importing a repository¶

Manually import your docs¶

If you do not have a connected account, you will need to select Import Manually and enter the information for your repository yourself. You will also need to manually configure the webhook for your repository as well. When importing your project, you will be asked for the repository URL, along with some other information for your new project. The URL is normally the URL or path name you’d use to checkout, clone, or branch your repository. Some examples:

Git:

https://github.com/ericholscher/django-kong.gitMercurial:

https://bitbucket.org/ianb/pipSubversion:

http://varnish-cache.org/svn/trunkBazaar:

lp:pasta

Add an optional homepage URL and some tags, and then click Next.

Once your project is created, you’ll need to manually configure the repository webhook if you would like to have new changes trigger builds for your project on Read the Docs. Go to your project’s Admin > Integrations page to configure a new webhook, or see our steps for webhook creation for more information on this process.

Note

The Admin page can be found at https://readthedocs.org/dashboard/<project-slug>/edit/.

You can access all of the project settings from the admin page sidebar.

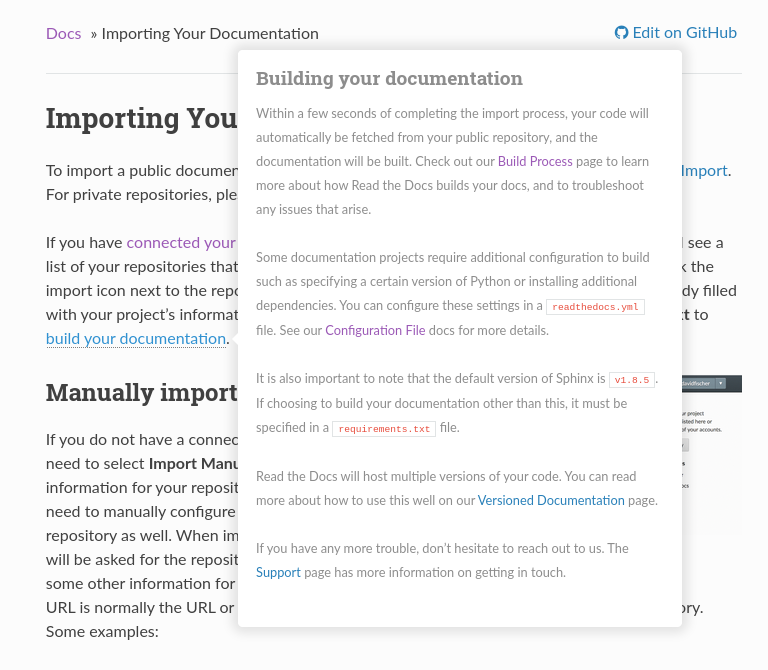

Building your documentation¶

Within a few seconds of completing the import process, your code will automatically be fetched from your repository, and the documentation will be built. Check out our Build process page to learn more about how Read the Docs builds your docs, and to troubleshoot any issues that arise.

Some documentation projects require additional configuration to build

such as specifying a certain version of Python or installing additional dependencies.

You can configure these settings in a .readthedocs.yaml file.

See our Configuration File docs for more details.

It is also important to note that the default version of Sphinx is v1.8.5.

We recommend to set the version your project uses explicitily.

Read the Docs will host multiple versions of your code. You can read more about how to use this well on our Versioned Documentation page.

If you have any more trouble, don’t hesitate to reach out to us. The Site Support page has more information on getting in touch.

Choosing Between Our Two Platforms¶

Users often ask what the differences are between Read the Docs Community and Read the Docs for Business.

While many of our features are available on both of these platforms, there are some key differences between our two platforms.

Read the Docs Community¶

Read the Docs Community is exclusively for hosting open source documentation. We support open source communities by providing free documentation building and hosting services, for projects of all sizes.

Important points:

Open source project hosting is always free

All documentation sites include advertising

Only supports public VCS repositories

All documentation is publicly accessible to the world

Less build time and fewer build resources (memory & CPU)

Email support included only for issues with our platform

Documentation is organized by projects

You can sign up for an account at https://readthedocs.org.

Read the Docs for Business¶

Read the Docs for Business is meant for companies and users who have more complex requirements for their documentation project. This can include commercial projects with private source code, projects that can only be viewed with authentication, and even large scale projects that are publicly available.

Important points:

Hosting plans require a paid subscription plan

There is no advertising on documentation sites

Allows importing private and public repositories from VCS

Supports private versions that require authentication to view

Supports team authentication, including SSO with Google, GitHub, GitLab, and Bitbucket

More build time and more build resources (memory & CPU)

Includes 24x5 email support, with 24x7 SLA support available

Documentation is organized by organization, giving more control over permissions

You can sign up for an account at https://readthedocs.com.

Questions?¶

If you have a question about which platform would be best, email us at support@readthedocs.org.

Read the Docs feature overview¶

Learn more about configuring your automated documentation builds and some of the core features of Read the Docs.

Overview of core features: Main Features | VCS Integrations | Custom Domains | Versioned Documentation | Downloadable Documentation | Documentation Hosting Features | Server Side Search | Traffic Analytics | Preview Documentation from Pull Requests | Build Notifications and Webhooks | User-defined Redirects | Security Log

Connecting with GitHub, BitBucket, or GitLab: Connecting your VCS account

Read the Docs build process: Configuration reference | Build process | Build customization | Environment Variables | Badges

Troubleshooting: Site Support | Frequently asked questions

Main Features¶

Read the Docs offers a number of platform features that are possible because we both build and host documentation for you.

Automatic Documentation Deployment¶

We integrate with GitHub, BitBucket, and GitLab. We automatically create webhooks in your repository, which tell us whenever you push a commit. We will then build and deploy your docs every time you push a commit. This enables a workflow that we call Continuous Documentation:

Once you set up your Read the Docs project, your users will always have up-to-date documentation.

Learn more about VCS Integrations.

Custom Domains & White Labeling¶

When you import a project to Read the Docs, we assign you a URL based on your project name. You are welcome to use this URL, but we also fully support custom domains for all our documentation projects.

Learn more about Custom Domains.

Versioned Documentation¶

We support multiple versions of your documentation, so that users can find the exact docs for the version they are using. We build this on top of the version control system that you’re already using. Each version on Read the Docs is just a tag or branch in your repository.

You don’t need to change how you version your code, we work with whatever process you are already using. If you don’t have a process, we can recommend one.

Learn more about Versioned Documentation.

Downloadable Documentation¶

Read the Docs supports building multiple formats for Sphinx-based projects:

PDF

ePub

Zipped HTML

This means that every commit that you push will automatically update your PDFs as well as your HTML.

This feature is great for users who are about to get on a plane and want offline docs, as well as being able to ship your entire set of documentation as one file.

Learn more about Downloadable Documentation.

Full-Text Search¶

We provide search across all the projects that we host. This actually comes in two different search experiences: dashboard search on the Read the Docs dashboard and in-doc search on documentation sites, using your own theme and our search results.

We offer a number of search features:

Search across subprojects

Search results land on the exact content you were looking for

Search across projects you have access to (available on Read the Docs for Business)

A full range of search operators including exact matching and excluding phrases.

Learn more about Server Side Search.

Open Source and Customer Focused¶

Read the Docs cares deeply about our customers and our community. As part of that commitment, all of the source code for Read the Docs is open source. This means there’s no vendor lock-in, and you are welcome to contribute the features you want or run your own instance.

Our bootstrapped company is owned and controlled by the founders, and fully funded by our customers and advertisers. That allows us to focus 100% of our attention on building the best possible product for you.

Learn more About Read the Docs.

Configuration File¶

In addition to using the admin panel of your project to configure your project,

you can use a configuration file in the root of your project.

The configuration file should be named .readthedocs.yaml.

Note

Some other variants like readthedocs.yaml, .readthedocs.yml, etc

are deprecated.

The main advantages of using a configuration file over the web interface are:

Settings are per version rather than per project.

Settings live in your VCS.

They enable reproducible build environments over time.

Some settings are only available using a configuration file

Tip

Using a configuration file is the recommended way of using Read the Docs.

Configuration File V2¶

Read the Docs supports configuring your documentation builds with a YAML file.

The configuration file must be in the root directory of your project

and be named .readthedocs.yaml.

All options are applied to the version containing this file. Below is an example YAML file which shows the most common configuration options:

# .readthedocs.yaml

# Read the Docs configuration file

# See https://docs.readthedocs.io/en/stable/config-file/v2.html for details

# Required

version: 2

# Set the version of Python and other tools you might need

build:

os: ubuntu-20.04

tools:

python: "3.9"

# You can also specify other tool versions:

# nodejs: "16"

# rust: "1.55"

# golang: "1.17"

# Build documentation in the docs/ directory with Sphinx

sphinx:

configuration: docs/conf.py

# If using Sphinx, optionally build your docs in additional formats such as PDF

# formats:

# - pdf

# Optionally declare the Python requirements required to build your docs

python:

install:

- requirements: docs/requirements.txt

# .readthedocs.yaml

# Read the Docs configuration file

# See https://docs.readthedocs.io/en/stable/config-file/v2.html for details

# Required

version: 2

# Set the version of Python and other tools you might need

build:

os: ubuntu-20.04

tools:

python: "3.9"

mkdocs:

configuration: mkdocs.yml

# Optionally declare the Python requirements required to build your docs

python:

install:

- requirements: docs/requirements.txt

Supported settings¶

Read the Docs validates every configuration file. Any configuration option that isn’t supported will make the build fail. This is to avoid typos and provide feedback on invalid configurations.

Warning

When using a v2 configuration file, the local settings from the web interface are ignored.

version¶

- Required

true

Example:

version: 2

Warning

If you don’t provide the version, v1 will be used.

formats¶

Additional formats of the documentation to be built, apart from the default HTML.

- Type

list- Options

htmlzip,pdf,epub,all- Default

[]

Example:

version: 2

# Default

formats: []

version: 2

# Build PDF & ePub

formats:

- epub

- pdf

Note

You can use the all keyword to indicate all formats.

version: 2

# Build all formats

formats: all

Warning

At the moment, only Sphinx supports additional formats.

pdf, epub, and htmlzip output is not yet supported when using MkDocs.

python¶

Configuration of the Python environment to be used.

version: 2

python:

install:

- requirements: docs/requirements.txt

- method: pip

path: .

extra_requirements:

- docs

- method: setuptools

path: another/package

system_packages: true

Warning

This option is now deprecated and replaced by build.tools.python. See python.version (legacy) for the description of this option.

List of installation methods of packages and requirements. You can have several of the following methods.

- Type

list- Default

[]

Install packages from a requirements file.

The path to the requirements file, relative to the root of the project.

- Key

requirements- Type

path- Required

true

Example:

version: 2

python:

version: "3.7"

install:

- requirements: docs/requirements.txt

- requirements: requirements.txt

Warning

If you are using a Conda environment to

manage the build, this setting will not have any effect. Instead

add the extra requirements to the environment file of Conda.

Install the project using python setup.py install or pip install.

The path to the package, relative to the root of the project.

- Key

path- Type

path- Required

true

The installation method.

- Key

method- Options

pip,setuptools- Default

pip

Extra requirements section to install in addition to the package dependencies.

Warning

You need to install your project with pip to use extra_requirements.

- Key

extra_requirements- Type

list- Default

[]

Example:

version: 2

python:

version: "3.7"

install:

- method: pip

path: .

extra_requirements:

- docs

- method: setuptools

path: package

With the previous settings, Read the Docs will execute the next commands:

pip install .[docs]

python package/setup.py install

Give the virtual environment access to the global site-packages directory.

- Type

bool- Default

false

Warning

If you are using a Conda environment to manage the build, this setting will not have any effect, since the virtual environment creation is managed by Conda.

conda¶

Configuration for Conda support.

version: 2

conda:

environment: environment.yml

The path to the Conda environment file, relative to the root of the project.

- Type

path- Required

true

build¶

Configuration for the documentation build process. This allows you to specify the base Read the Docs image used to build the documentation, and control the versions of several tools: Python, Node.js, Rust, and Go.

version: 2

build:

os: ubuntu-20.04

tools:

python: "3.9"

nodejs: "16"

rust: "1.55"

golang: "1.17"

The Docker image used for building the docs. Image names refer to the operating system Read the Docs uses to build them.

Note

Arbitrary Docker images are not supported.

- Type

string- Options

ubuntu-20.04,ubuntu-22.04- Required

true

Version specifiers for each tool. It must contain at least one tool.

- Type

dict- Options

python,nodejs,rust,golang- Required

true

Python version to use. You can use several interpreters and versions, from CPython, PyPy, Miniconda, and Mamba.

Note

If you use Miniconda3 or Mambaforge, you can select the Python version

using the environment.yml file. See our Conda Support guide

for more information.

- Type

string- Options

2.73(last stable CPython version)3.63.73.83.93.103.11pypy3.7pypy3.8pypy3.9miniconda3-4.7mambaforge-4.10

Node.js version to use.

- Type

string- Options

141618

Rust version to use.

- Type

string- Options

1.551.61

Go version to use.

- Type

string- Options

1.171.18

List of APT packages to install. Our build servers run Ubuntu 18.04, with the default set of package repositories installed. We don’t currently support PPA’s or other custom repositories.

- Type

list- Default

[]

version: 2

build:

apt_packages:

- libclang

- cmake

Note

When possible avoid installing Python packages using apt (python3-numpy for example),

use pip or Conda instead.

Commands to be run before or after a Read the Docs pre-defined build jobs. This allows you to run custom commands at a particular moment in the build process. See Build customization for more details.

version: 2

build:

os: ubuntu-22.04

tools:

python: "3.10"

jobs:

pre_create_environment:

- echo "Command run at 'pre_create_environment' step"

post_build:

- echo "Command run at 'post_build' step"

- echo `date`

Note

Each key under build.jobs must be a list of strings.

build.os and build.tools are also required to use build.jobs.

- Type

dict- Allowed keys

post_checkout,pre_system_dependencies,post_system_dependencies,pre_create_environment,post_create_environment,pre_install,post_install,pre_build,post_build- Required

false- Default

{}

Specify a list of commands that Read the Docs will run on the build process.

When build.commands is used, none of the pre-defined build jobs will be executed.

(see Build customization for more details).

This allows you to run custom commands and control the build process completely.

The _readthedocs/html directory (relative to the checkout’s path) will be uploaded and hosted by Read the Docs.

Warning

This feature is in a beta phase and could suffer incompatible changes or even removed completely in the near feature. It does not yet support some of the Read the Docs’ integrations like the flyout menu, search and ads. However, integrating all of them is part of the plan. Use it under your own responsibility.

version: 2

build:

os: ubuntu-22.04

tools:

python: "3.10"

commands:

- pip install pelican

- pelican --settings docs/pelicanconf.py --output _readthedocs/html/ docs/

Note

build.os and build.tools are also required when using build.commands.

- Type

list- Required

false- Default

[]

sphinx¶

Configuration for Sphinx documentation (this is the default documentation type).

version: 2

sphinx:

builder: html

configuration: conf.py

fail_on_warning: true

Note

If you want to pin Sphinx to a specific version,

use a requirements.txt or environment.yml file

(see Requirements file and conda.environment).

If you are using a metadata file to describe code dependencies

like setup.py, pyproject.toml, or similar,

you can use the extra_requirements option

(see Packages).

This also allows you to override the default pinning done by Read the Docs

if your project was created before October 2020.

The builder type for the Sphinx documentation.

- Type

string- Options

html,dirhtml,singlehtml- Default

html

Note

The htmldir builder option was renamed to dirhtml to use the same name as sphinx.

Configurations using the old name will continue working.

The path to the conf.py file, relative to the root of the project.

- Type

path- Default

null

If the value is null,

Read the Docs will try to find a conf.py file in your project.

Turn warnings into errors

(-W and --keep-going options).

This means the build fails if there is a warning and exits with exit status 1.

- Type

bool- Default

false

mkdocs¶

Configuration for MkDocs documentation.

version: 2

mkdocs:

configuration: mkdocs.yml

fail_on_warning: false

Note

If you want to pin MkDocs to a specific version,

use a requirements.txt or environment.yml file

(see Requirements file and conda.environment).

If you are using a metadata file to describe code dependencies

like setup.py, pyproject.toml, or similar,

you can use the extra_requirements option

(see Packages).

This also allows you to override the default pinning done by Read the Docs

if your project was created before March 2021.

The path to the mkdocs.yml file, relative to the root of the project.

- Type

path- Default

null

If the value is null,

Read the Docs will try to find a mkdocs.yml file in your project.

Turn warnings into errors. This means that the build stops at the first warning and exits with exit status 1.

- Type

bool- Default

false

submodules¶

VCS submodules configuration.

Note

Only Git is supported at the moment.

Warning

You can’t use include and exclude settings for submodules at the same time.

version: 2

submodules:

include:

- one

- two

recursive: true

List of submodules to be included.

- Type

list- Default

[]

Note

You can use the all keyword to include all submodules.

version: 2

submodules:

include: all

List of submodules to be excluded.

- Type

list- Default

[]

Note

You can use the all keyword to exclude all submodules.

This is the same as include: [].

version: 2

submodules:

exclude: all

Do a recursive clone of the submodules.

- Type

bool- Default

false

Note

This is ignored if there aren’t submodules to clone.

search¶

Settings for more control over Server Side Search.

version: 2

search:

ranking:

api/v1/*: -1

api/v2/*: 4

ignore:

- 404.html

Set a custom search rank over pages matching a pattern.

- Type

mapof patterns to ranks- Default

{}

Patterns are matched against the final html pages produced by the build

(you should try to match index.html, not docs/index.rst).

Patterns can include some special characters:

*matches everything?matches any single character[seq]matches any character inseq

The rank can be an integer number between -10 and 10 (inclusive). Pages with a rank closer to -10 will appear further down the list of results, and pages with a rank closer to 10 will appear higher in the list of results. Note that 0 means normal rank, not no rank.

If you are looking to completely ignore a page, check search.ignore.

version: 2

search:

ranking:

# Match a single file

tutorial.html: 2

# Match all files under the api/v1 directory

api/v1/*: -5

# Match all files that end with tutorial.html

'*/tutorial.html': 3

Note

The final rank will be the last pattern to match the page.

Tip

Is better to decrease the rank of pages you want to deprecate, rather than increasing the rank of the other pages.

Don’t index files matching a pattern. This is, you won’t see search results from these files.

- Type

listof patterns- Default

['search.html', 'search/index.html', '404.html', '404/index.html']

Patterns are matched against the final html pages produced by the build

(you should try to match index.html, not docs/index.rst).

Patterns can include some special characters:

*matches everything?matches any single character[seq]matches any character inseq

version: 2

search:

ignore:

# Ignore a single file

- 404.html

# Ignore all files under the search/ directory

- search/*

# Ignore all files that end with ref.html

- '*/ref.html'

version: 2

search:

ignore:

# Custom files to ignore

- file.html

- api/v1/*

# Defaults

- search.html

- search/index.html

- 404.html

- 404/index.html'

Note

Since Read the Docs fallbacks to the original search engine when no results are found, you may still see search results from ignored pages.

Schema¶

You can see the complete schema here.

Legacy build specification¶

The legacy build specification used a different set of Docker images,

and only allowed you to specify the Python version.

It remains supported for backwards compatibility reasons.

Check out the build above

for an alternative method that is more flexible.

version: 2

build:

image: latest

apt_packages:

- libclang

- cmake

python:

version: "3.7"

The legacy build specification also supports

the apt_packages key described above.

Warning

When using the new specification,

the build.image and python.version options cannot be used.

Doing so will error the build.

build (legacy)¶

The Docker image used for building the docs.

- Type

string- Options

stable,latest- Default

latest

Each image support different Python versions and has different packages installed, as defined here:

The Python version (this depends on build.image (legacy)).

- Type

string- Default

3

Note

Make sure to use quotes (") to make it a string.

We previously supported using numbers here,

but that approach is deprecated.

Warning

If you are using a Conda environment to manage the build, this setting will not have any effect, as the Python version is managed by Conda.

Migrating from v1¶

Changes¶

The version setting is required. See version.

The default value of the formats setting has changed to

[]and it doesn’t include the values from the web interface.The top setting

requirements_filewas moved topython.installand we don’t try to find a requirements file if the option isn’t present. See Requirements file.The setting

conda.filewas renamed toconda.environment. See conda.environment.The

build.imagesetting has been replaced bybuild.os. See build.os. Alternatively, you can use the legacybuild.imagethat now has only two options:latest(default) andstable.The settings

python.setup_py_installandpython.pip_installwere replaced bypython.install. And now it accepts a path to the package. See Packages.The setting

python.use_system_site_packageswas renamed topython.system_packages. See python.system_packages.The build will fail if there are invalid keys (strict mode).

Warning

Some values from the web interface are no longer respected, please see Migrating from the web interface if you have settings there.

New settings¶

Migrating from the web interface¶

This should be pretty straightforward, just go to the Admin > Advanced settings, and find their respective setting in here.

Not all settings in the web interface are per version, but are per project. These settings aren’t supported via the configuration file.

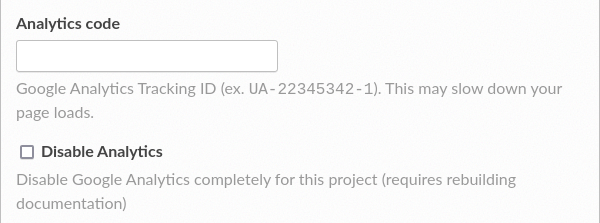

NameRepository URLRepository typeLanguageProgramming languageProject homepageTagsSingle versionDefault branchDefault versionShow versions warningPrivacy levelAnalytics code

VCS Integrations¶

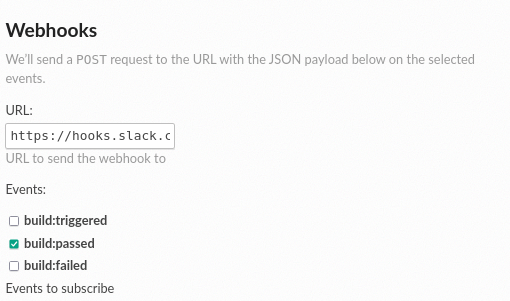

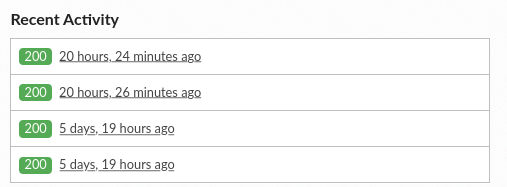

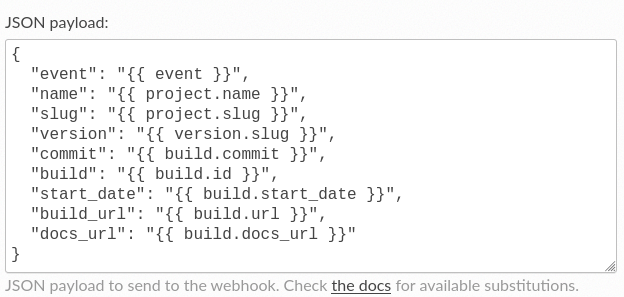

Read the Docs provides integrations with several VCS providers to detect changes to your documentation and versions, mainly using webhooks. Integrations are configured with your repository provider, such as GitHub, Bitbucket or GitLab, and with each change to your repository, Read the Docs is notified. When we receive an integration notification, we determine if the change is related to an active version for your project, and if it is, a build is triggered for that version.

You’ll find a list of configured integrations on your project’s Admin dashboard, under Integrations. You can select any of these integrations to see the integration detail page. This page has additional configuration details and a list of HTTP exchanges that have taken place for the integration, including the Payload URL needed by the repository provider such as GitHub, GitLab, or Bitbucket.

Integration Creation¶

If you have connected your Read the Docs account to GitHub, Bitbucket, or GitLab, an integration will be set up automatically for your repository. However, if your project was not imported through a connected account, you may need to manually configure an integration for your project.

To manually set up an integration, go to Admin > Integrations > Add integration dashboard page and select the integration type you’d like to add. After you have added the integration, you’ll see a link to information about the integration.

As an example, the URL pattern looks like this: https://readthedocs.org/api/v2/webhook/<project-name>/<id>/.

Use this URL when setting up a new integration with your provider – these steps vary depending on the provider.

Note

If your account is connected to the provider, we’ll try to setup the integration automatically. If something fails, you can still setup the integration manually.

GitHub¶

Go to the Settings page for your project

Click Webhooks > Add webhook

For Payload URL, use the URL of the integration on Read the Docs, found on the project’s Admin > Integrations page. You may need to prepend https:// to the URL.

For Content type, both application/json and application/x-www-form-urlencoded work

Leave the Secrets field blank

Select Let me select individual events, and mark Branch or tag creation, Branch or tag deletion, Pull requests and Pushes events

Ensure Active is enabled; it is by default

Finish by clicking Add webhook. You may be prompted to enter your GitHub password to confirm your action.

You can verify if the webhook is working at the bottom of the GitHub page under Recent Deliveries. If you see a Response 200, then the webhook is correctly configured. For a 403 error, it’s likely that the Payload URL is incorrect.

Note

The webhook token, intended for the GitHub Secret field, is not yet implemented.

Bitbucket¶

Go to the Settings > Webhooks > Add webhook page for your project

For URL, use the URL of the integration on Read the Docs, found on the Admin > Integrations page

Under Triggers, Repository push should be selected

Finish by clicking Save

GitLab¶

Go to the Settings > Webhooks page for your project

For URL, use the URL of the integration on Read the Docs, found on the Admin > Integrations page

Leave the default Push events selected, additionally mark Tag push events and Merge request events.

Finish by clicking Add Webhook

Gitea¶

These instructions apply to any Gitea instance.

Warning

This isn’t officially supported, but using the “GitHub webhook” is an effective workaround, because Gitea uses the same payload as GitHub. The generic webhook is not compatible with Gitea. See issue #8364 for more details. Official support may be implemented in the future.

On Read the Docs:

Manually create a “GitHub webhook” integration (this will show a warning about the webhook not being correctly set up, that will go away when the webhook is configured in Gitea)

On your Gitea instance:

Go to the Settings > Webhooks page for your project on your Gitea instance

Create a new webhook of type “Gitea”

For URL, use the URL of the integration on Read the Docs, found on the Admin > Integrations page

Leave the default HTTP Method as POST

For Content type, both application/json and application/x-www-form-urlencoded work

Leave the Secret field blank

Select Choose events, and mark Branch or tag creation, Branch or tag deletion and Push events

Ensure Active is enabled; it is by default

Finish by clicking Add Webhook

Test the webhook with Delivery test

Finally, on Read the Docs, check that the warnings have disappeared and the delivery test triggered a build.

Using the generic API integration¶

For repositories that are not hosted with a supported provider, we also offer a generic API endpoint for triggering project builds. Similar to webhook integrations, this integration has a specific URL, which can be found on the project’s Integrations dashboard page (Admin > Integrations).

Token authentication is required to use the generic endpoint, you will find this token on the integration details page. The token should be passed in as a request parameter, either as form data or as part of JSON data input.

Parameters¶

This endpoint accepts the following arguments during an HTTP POST:

- branches

The names of the branches to trigger builds for. This can either be an array of branch name strings, or just a single branch name string.

Default: latest

- token

The integration token found on the project’s Integrations dashboard page (Admin > Integrations).

For example, the cURL command to build the dev branch, using the token

1234, would be:

curl -X POST -d "branches=dev" -d "token=1234" https://readthedocs.org/api/v2/webhook/example-project/1/

A command like the one above could be called from a cron job or from a hook inside Git, Subversion, Mercurial, or Bazaar.

Authentication¶

This endpoint requires authentication. If authenticating with an integration token, a check will determine if the token is valid and matches the given project. If instead an authenticated user is used to make this request, a check will be performed to ensure the authenticated user is an owner of the project.

Debugging webhooks¶

If you are experiencing problems with an existing webhook, you may be able to use the integration detail page to help debug the issue. Each project integration, such as a webhook or the generic API endpoint, stores the HTTP exchange that takes place between Read the Docs and the external source. You’ll find a list of these exchanges in any of the integration detail pages.

Resyncing webhooks¶

It might be necessary to re-establish a webhook if you are noticing problems. To resync a webhook from Read the Docs, visit the integration detail page and follow the directions for re-syncing your repository webhook.

Payload validation¶

If your project was imported through a connected account, we create a secret for every integration that offers a way to verify that a webhook request is legitimate. Currently, GitHub and GitLab offer a way to check this.

Troubleshooting¶

Webhook activation failed. Make sure you have the necessary permissions¶

If you find this error, make sure your user has permissions over the repository. In case of GitHub, check that you have granted access to the Read the Docs OAuth App to your organization.

My project isn’t automatically building¶

If your project isn’t automatically building, you can check your integration on Read the Docs to see the payload sent to our servers. If there is no recent activity on your Read the Docs project webhook integration, then it’s likely that your VCS provider is not configured correctly. If there is payload information on your Read the Docs project, you might need to verify that your versions are configured to build correctly.

Either way, it may help to either resync your webhook integration (see Resyncing webhooks for information on this process), or set up an entirely new webhook integration.

Custom Domains¶

Custom domains allow you to serve your documentation from your own domain. This is great for maintaining a consistent brand for your documentation and application.

By default, your documentation is served from a Read the Docs subdomain using the project’s slug:

<slug>.readthedocs.iofor Read the Docs Community<slug>.readthedocs-hosted.comfor Read the Docs for Business.

For example if you import your project and it gets the slug example-docs, it will be served from https://example-docs.readthedocs.io.

Contents

Adding a custom domain¶

To setup your custom domain, follow these steps:

Go the Admin tab of your project.

Click on Domains.

Enter your domain.

Mark the Canonical option if you want use this domain as your canonical domain.

Click on Add.

At the top of the next page you’ll find the value of the DNS record that you need to point your domain to. For Read the Docs Community this is

readthedocs.io, and for Read the Docs for Business the record is in the form of<hash>.domains.readthedocs.com.Note

For a subdomain like

docs.example.comadd a CNAME record, and for a root domain likeexample.comuse an ANAME or ALIAS record.

By default, we provide a validated SSL certificate for the domain, managed by Cloudflare. The SSL certificate issuance should happen within a few minutes, but might take up to one hour. See SSL certificate issue delays for more troubleshooting options.

As an example, our blog’s DNS record looks like this:

dig +short CNAME blog.readthedocs.com

readthedocs.io.

Warning

We don’t support pointing subdomains or root domains to a project using A records. DNS A records require a static IP address and our IPs may change without notice.

Removing a custom domain¶

To remove a custom domain:

Go the Admin tab of your project.

Click on Domains.

Click the Remove button next to the domain.

Click Confirm on the confirmation page.

Warning

Once a domain is removed, your previous documentation domain is no longer served by Read the Docs, and any request for it will return a 404 Not Found!

Strict Transport Security (HSTS) and other custom headers¶

By default, we do not return a Strict Transport Security header (HSTS) for user custom domains. This is a conscious decision as it can be misconfigured in a not easily reversible way. For both Read the Docs Community and Read the Docs for Business, HSTS and other custom headers can be set upon request.

We always return the HSTS header with a max-age of at least one year

for our own domains including *.readthedocs.io, *.readthedocs-hosted.com, readthedocs.org and readthedocs.com.

Please contact Site Support if you want to add a custom header to your domain.

Multiple documentation sites as sub-folders of a domain¶

You may host multiple documentation repositories as sub-folders of a single domain.

For example, docs.example.org/projects/repo1 and docs.example.org/projects/repo2.

This is a way to boost the SEO of your website.

See Subprojects for more information.

Troubleshooting¶

SSL certificate issue delays¶

The status of your domain validation and certificate can always be seen on the details page for your domain under Admin > Domains > YOURDOMAIN.TLD (details).

Domains are usually validated and a certificate issued within minutes. However, if you setup the domain in Read the Docs without provisioning the necessary DNS changes and then update DNS hours or days later, this can cause a delay in validating because there is an exponential back-off in validation.

Tip

Loading the domain details in the Read the Docs dashboard and saving the domain again will force a revalidation.

Migrating from GitBook¶

If your custom domain was previously used in GitBook, contact GitBook support (via live chat in their website) to remove the domain name from their DNS Zone in order for your domain name to work with Read the Docs, else it will always redirect to GitBook.

Versioned Documentation¶

Read the Docs supports multiple versions of your repository.

On initial import,

we will create a latest version.

This will point at the default branch defined in your VCS control

(by default, main on Git and default in Mercurial).

If your project has any tags or branches with a name following semantic versioning,

we also create a stable version, tracking your most recent release.

If you want a custom stable version,

create either a tag or branch in your project with that name.

When you have VCS Integrations configured for your repository, we will automatically build each version when you push a commit.

How we envision versions working¶

In the normal case,

the latest version will always point to the most up to date development code.

If you develop on a branch that is different than the default for your VCS,

you should set the Default Branch to that branch.

You should push a tag for each version of your project.

These tags should be numbered in a way that is consistent with semantic versioning.

This will map to your stable branch by default.

Note

We in fact are parsing your tag names against the rules given by

PEP 440. This spec allows “normal” version numbers like 1.4.2 as

well as pre-releases. An alpha version or a release candidate are examples

of pre-releases and they look like this: 2.0a1.

We only consider non pre-releases for the stable version of your

documentation.

If you have documentation changes on a long-lived branch, you can build those too. This will allow you to see how the new docs will be built in this branch of the code. Generally you won’t have more than 1 active branch over a long period of time. The main exception here would be release branches, which are branches that are maintained over time for a specific release number.

Version States¶

States define the visibility of a version across the site. You can change the states of a version from the Versions tab of your project.

Active¶

Active

Docs for this version are visible

Builds can be triggered for this version

Inactive

Docs for this version aren’t visible

Builds can’t be triggered for this version

When you deactivate a version, its docs are removed.

Privacy levels¶

Note

Privacy levels are only supported on Read the Docs for Business.

Public¶

It means that everything is available to be seen by everyone.

Private¶

Private versions are available only to people who have permissions to see them. They will not display on any list view, and will 404 when you link them to others. If you want to share your docs temporarily, see Sharing.

In addition, if you want other users to view the build page of your public versions, you’ll need to the set the privacy level of your project to public.

Logging out¶

When you log in to a documentation site, you will be logged in until close your browser. To log out, click on the Log out link in your documentation’s flyout menu. This is usually located in the bottom right or bottom left, depending on the theme design. This will log you out from the current domain, but not end any other session that you have active.

Version warning¶

This is a banner that appears on the top of every page of your docs that aren’t stable or latest. This banner has a text with a link redirecting the users to the latest version of your docs.

This feature is disabled by default on new projects, you can enable it in the admin section of your docs (Admin > Advanced Settings).

Note

The banner will be injected in an HTML element with the main role or in the main tag.

For example:

<div role="main">

<!-- The banner would be injected here -->

...

</div>

<main>

<!-- The banner would be injected here -->

...

</main>

Redirects on root URLs¶

When a user hits the root URL for your documentation,

for example https://pip.readthedocs.io/,

they will be redirected to the Default version.

This defaults to latest,

but could also point to your latest released version.

Downloadable Documentation¶

Read the Docs supports building multiple formats for Sphinx-based projects:

PDF

ePub

Zipped HTML

This means that every commit that you push will automatically update your PDFs as well as your HTML.

This is enabled by the formats key in our config file. A simple example is here:

# Build PDF & ePub

formats:

- epub

- pdf

If you want to see an example, you can download the Read the Docs documentation in the following formats:

Use cases¶

This functionality is great for anyone who needs documentation when they aren’t connected to the internet. Users who are about to get on a plane can grab a PDF and have the entire doc set ready for their trip.

The other value is having the entire docset in a single file. You can send a user an email with a single PDF or ePub and they’ll have all the docs in one place.

Deleting downloadable content¶

The entries in the Downloads section of your project dashboard reflect the formats specified in your config file for each active version.

This means that if you wish to remove downloadable content for a given version, you can do so by removing the matching formats key from your config file.

Documentation Hosting Features¶

The main way that users interact with your documentation is via the hosted HTML that we serve. We support a number of important features that you would expect for a documentation host.

Contents

Subdomain support¶

Every project has a subdomain that is available to serve its documentation based on it’s slug.

If you go to <slug>.readthedocs.io, it should show you the latest version of your documentation,

for example https://docs.readthedocs.io.

For Read the Docs for Business the subdomain looks like <slug>.readthedocs-hosted.com.

See also

Content Delivery Network (CDN)¶

A CDN is used for making documentation pages faster for your users. This is done by caching the documentation page content in multiple data centers around the world, and then serving docs from the data center closest to the user.

We support CDN’s on both of our sites, as we talk about below.

On Read the Docs Community, we are able to provide a CDN to all the projects that we host. This service is graciously sponsored by CloudFlare.

We bust the cache on the CDN when the following actions happen:

Your Project is saved

Your Domain is saved

A new version is built

On Read the Docs for Business, we offer a CDN as part of our Pro plan and above. Please contact support@readthedocs.com to discuss how we can enable this for you.

Sitemaps¶

Sitemaps allows us to inform search engines about URLs that are available for crawling and communicate them additional information about each URL of the project:

when it was last updated,

how often it changes,

how important it is in relation to other URLs in the site, and

what translations are available for a page.

Read the Docs automatically generates a sitemap for each project that hosts

to improve results when performing a search on these search engines.

This allow us to prioritize results based on the version number, for example

to show stable as the top result followed by latest and then all the project’s

versions sorted following semantic versioning.

Custom Not Found (404) Pages¶

If you want your project to use a custom page for not found pages instead of the “Maze Found” default,

you can put a 404.html at the top level of your project’s HTML output.

When a 404 is returned,

Read the Docs checks if there is a 404.html in the root of your project’s output

corresponding to the current version

and uses it if it exists.

Otherwise, it tries to fall back to the 404.html page

corresponding to the default version of the project.

We recommend the sphinx-notfound-page extension,

which Read the Docs maintains.

It automatically creates a 404.html page for your documentation,

matching the theme of your project.

See its documentation for how to install and customize it.

Custom robots.txt Pages¶

robots.txt files allow you to customize how your documentation is indexed in search engines. We automatically generate one for you, which automatically hides versions which are set to Hidden.

The robots.txt file will be served from the default version of your Project.

This is because the robots.txt file is served at the top-level of your domain,

so we must choose a version to find the file in.

The default version is the best place to look for it.

Sphinx and Mkdocs both have different ways of outputting static files in the build:

Sphinx¶

Sphinx uses html_extra_path option to add static files to the output.

You need to create a robots.txt file and put it under the path defined in html_extra_path.

MkDocs¶

MkDocs needs the robots.txt to be at the directory defined at docs_dir config.

Server Side Search¶

Read the Docs provides full-text search across all of the pages of all projects, this is powered by Elasticsearch. You can search all projects at https://readthedocs.org/search/, or search only on your project from the Search tab of your project.

We override the default search engine of your project with our search engine to provide you better results within your project. We fallback to the built-in search engine from your project if our search engine doesn’t return any results, just in case we missed something 😄

Search features¶

We offer a number of benefits compared to other documentation hosts:

- Search across subprojects

Subprojects allow you to host multiple discrete projects on a single domain. Every subproject hosted on that same domain is included in the search results of the main project.

- Search results land on the exact content you were looking for